Study of Video-driven 2D Character Animation Generation Method

Abstract

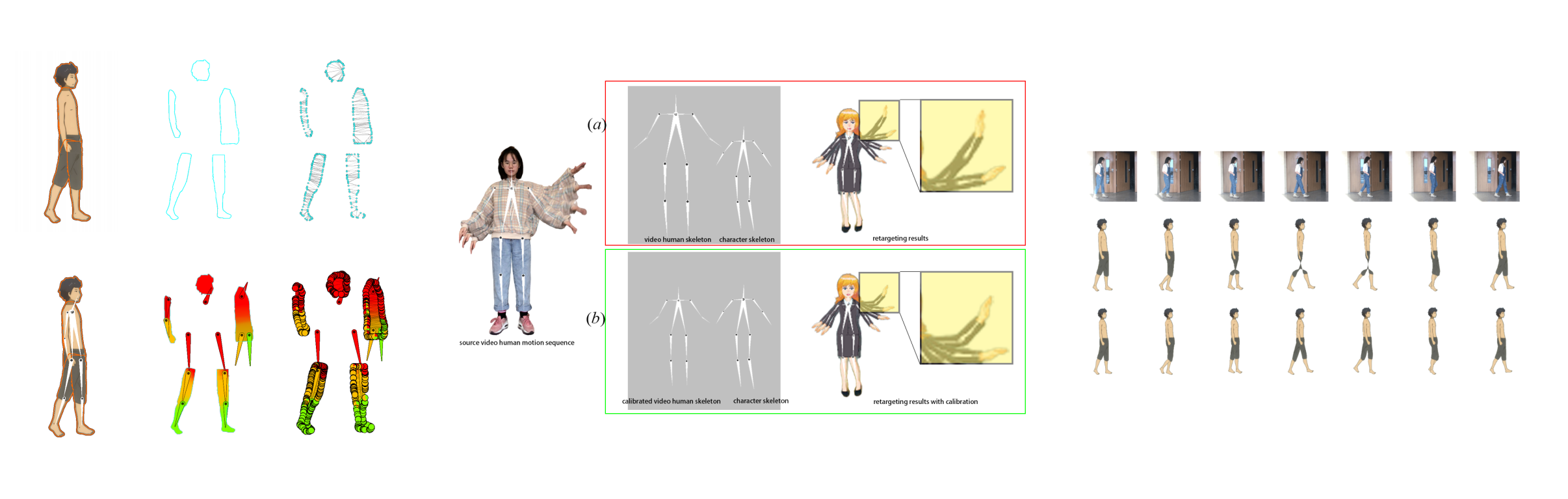

Video-driven animation has long been a popular and challenging topic in computer animation. We propose a method that maps a sequence of human skeletal keypoints extracted from a video onto a two-dimensional character to generate 2D character animation. Given a picture of a 2D character, we extract the motion of a real person from video data and use it to drive the character's deformation. We analyze common 2D human body movements and classify the basic postures of the human body, recognize skeleton postures with a back-propagation network, capture human motion by automatically tracking the coordinates of the skeletal keypoints in the video, and retarget the motion data to the 2D character. Compared with traditional methods, our approach is less sensitive to the illumination and background complexity of the video. We calibrate the human motion in the video to the 2D character according to the skeleton topology, which avoids the motion distortion caused by differences in skeleton size and proportions. Experimental results show that the proposed algorithm can generate the motion of 2D characters from the motion of people in video data, that the resulting animation is natural and smooth, and that the algorithm is robust.
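The abstract mentions recognizing skeleton postures with a back-propagation network but does not give the network's architecture, input features, or posture classes. As an illustration only, the following Python sketch assumes a hypothetical six-joint keypoint layout, features normalized to the pelvis position and torso length, a single sigmoid hidden layer with a softmax output, and two made-up classes (`arms_down`, `arms_up`) trained on synthetic poses instead of real tracked video keypoints. It is not the authors' implementation.

```python
import math
import random

# Hypothetical keypoint layout; the paper does not list its joint set.
JOINTS = ["neck", "pelvis", "l_wrist", "r_wrist", "l_ankle", "r_ankle"]
POSTURES = ["arms_down", "arms_up"]  # hypothetical posture classes


def normalize(pose):
    """Turn {joint: (x, y)} into a feature vector that ignores where the person
    stands and how large they appear: origin at the pelvis, unit length equal
    to the neck-to-pelvis distance."""
    px, py = pose["pelvis"]
    scale = math.dist(pose["neck"], pose["pelvis"]) or 1.0
    feats = []
    for name in JOINTS:
        x, y = pose[name]
        feats += [(x - px) / scale, (y - py) / scale]
    return feats


class TinyMLP:
    """One sigmoid hidden layer and a softmax output, trained by backpropagation."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def forward(self, x):
        h = [1.0 / (1.0 + math.exp(-(sum(w * v for w, v in zip(row, x)) + b)))
             for row, b in zip(self.w1, self.b1)]
        z = [sum(w * v for w, v in zip(row, h)) + b for row, b in zip(self.w2, self.b2)]
        m = max(z)  # subtract the max for a numerically stable softmax
        e = [math.exp(v - m) for v in z]
        s = sum(e)
        return h, [v / s for v in e]

    def train_step(self, x, label, lr=0.5):
        h, p = self.forward(x)
        # Gradient of cross-entropy w.r.t. the output logits: p - one_hot(label).
        dz = [pk - (1.0 if k == label else 0.0) for k, pk in enumerate(p)]
        # Backpropagate to the hidden layer using the weights before this update.
        dh = [sum(dz[k] * self.w2[k][j] for k in range(len(dz))) * h[j] * (1.0 - h[j])
              for j in range(len(h))]
        for k, dzk in enumerate(dz):
            self.w2[k] = [w - lr * dzk * hj for w, hj in zip(self.w2[k], h)]
            self.b2[k] -= lr * dzk
        for j, dhj in enumerate(dh):
            self.w1[j] = [w - lr * dhj * xi for w, xi in zip(self.w1[j], x)]
            self.b1[j] -= lr * dhj

    def predict(self, x):
        _, p = self.forward(x)
        return max(range(len(p)), key=lambda k: p[k])


def synthetic_pose(label, rng):
    """Toy stand-in for tracked video keypoints (image y axis points down):
    a random position and size in the frame plus a little detection noise."""
    s = rng.uniform(0.5, 2.0)
    ox, oy = rng.uniform(0, 300), rng.uniform(0, 300)
    wrist_y = 0 if label == 0 else -160  # arms down vs. raised above the head
    base = {"neck": (0, -100), "pelvis": (0, 0),
            "l_wrist": (-20, wrist_y), "r_wrist": (20, wrist_y),
            "l_ankle": (-10, 100), "r_ankle": (10, 100)}
    return {k: (ox + s * (x + rng.gauss(0, 5)), oy + s * (y + rng.gauss(0, 5)))
            for k, (x, y) in base.items()}


rng = random.Random(42)
data = [(normalize(synthetic_pose(lbl, rng)), lbl) for lbl in (0, 1) for _ in range(50)]
net = TinyMLP(n_in=2 * len(JOINTS), n_hidden=8, n_out=len(POSTURES))
for _ in range(30):  # epochs of plain stochastic gradient descent
    rng.shuffle(data)
    for x, lbl in data:
        net.train_step(x, lbl)

print(POSTURES[net.predict(normalize(synthetic_pose(1, rng)))])
```

Normalizing by the pelvis position and the neck-to-pelvis distance is one simple way to make the classifier independent of where the person stands and how large they appear in the frame; a real system would train on labeled keypoints from the pose tracker rather than on the hypothetical `synthetic_pose` helper.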

Conclusion and Future Work

This paper proposes a method that maps human motion data in a video onto a two-dimensional character to generate character animation. We statistically analyze common 2D human body movements and classify the basic postures of the human body; design and implement a method for recognizing human body postures from skeleton information in images and videos; and propose a geometric calibration method based on the skeleton's tree structure that corrects bone orientations during motion retargeting. This yields a good skeleton-driven deformation and produces high-quality animation in which the character adopts the same postures as the person in the video. The method can be used to produce animation automatically with little user interaction.

Although our method maps the sequence of human skeletal postures in the video onto a 2D character and produces high-quality animation, there is room for improvement: 1) Scope of application. Our method applies only to 2D human characters and cannot currently be extended to other types of images, such as animals and plants. 2) Physical simulation. We do not model phenomena such as hair and clothing, so their deformation is not natural enough; as shown in Fig. 14(e), the man's hair deforms stiffly. In the future, we will address these issues: we will adapt this method to animate animals and plants, and use physical simulation to obtain higher-quality results. We also plan to integrate all of these methods into one interactive animation system.
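The tree-structured geometric calibration is described here only at a high level. One common way to realize it, assumed for this sketch rather than taken from the paper, is to visit the skeleton from the root outward and give each bone the direction it has in the tracked video frame while keeping the length it has on the 2D character. The joint names in `PARENT`, the pinned root, and the rest-pose fallback for coincident keypoints are all hypothetical choices made for illustration.

```python
import math

# Hypothetical 2D skeleton topology (joint -> parent); the paper does not
# publish its joint set, so these names are illustrative only.
PARENT = {
    "pelvis": None,
    "neck": "pelvis", "head": "neck",
    "l_elbow": "neck", "l_wrist": "l_elbow",
    "r_elbow": "neck", "r_wrist": "r_elbow",
    "l_knee": "pelvis", "l_ankle": "l_knee",
    "r_knee": "pelvis", "r_ankle": "r_knee",
}


def root_first_order(parent):
    """List joints so that every parent comes before its children."""
    order = []

    def visit(j):
        if j in order:
            return
        if parent[j] is not None:
            visit(parent[j])
        order.append(j)

    for j in parent:
        visit(j)
    return order


def bone_lengths(pose, parent):
    """Length of the bone ending at each non-root joint."""
    return {j: math.dist(pose[j], pose[p]) for j, p in parent.items() if p is not None}


def retarget(src_pose, char_rest, parent, eps=1e-6):
    """Map one tracked frame onto the character: each bone takes its direction
    from the video frame but keeps the character's own length."""
    lengths = bone_lengths(char_rest, parent)
    out = {}
    for j in root_first_order(parent):
        p = parent[j]
        if p is None:
            out[j] = char_rest[j]  # assumption: root pinned to the character's rest position
            continue
        vx, vy = src_pose[j][0] - src_pose[p][0], src_pose[j][1] - src_pose[p][1]
        n = math.hypot(vx, vy)
        if n < eps:
            # Coincident keypoints (e.g. a missed detection): use the rest-pose direction.
            vx, vy = char_rest[j][0] - char_rest[p][0], char_rest[j][1] - char_rest[p][1]
            n = math.hypot(vx, vy)
        if n == 0:
            out[j] = out[p]  # zero-length bone on the character itself
            continue
        out[j] = (out[p][0] + vx / n * lengths[j], out[p][1] + vy / n * lengths[j])
    return out


# Character rest pose (image coordinates, y down) with short limbs.
char_rest = {
    "pelvis": (200, 300), "neck": (200, 240), "head": (200, 220),
    "l_elbow": (180, 270), "l_wrist": (175, 300),
    "r_elbow": (220, 270), "r_wrist": (225, 300),
    "l_knee": (190, 340), "l_ankle": (190, 380),
    "r_knee": (210, 340), "r_ankle": (210, 380),
}
# One tracked video frame: a performer with different proportions raising the right arm.
frame = {
    "pelvis": (0, 0), "neck": (0, -100), "head": (0, -130),
    "l_elbow": (-40, -60), "l_wrist": (-50, -20),
    "r_elbow": (50, -130), "r_wrist": (60, -190),
    "l_knee": (-15, 60), "l_ankle": (-15, 120),
    "r_knee": (15, 60), "r_ankle": (15, 120),
}
posed = retarget(frame, char_rest, PARENT)
print(posed["r_wrist"])
```

Because every bone keeps the character's length, a performer with longer arms or legs does not stretch the character; only the bone directions are transferred. Root translation and the deformation of the character image from the posed skeleton are outside the scope of this sketch.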